DiffusionNOCS: Managing Symmetry and Uncertainty in Sim2Real Multi-Modal Category-level Pose Estimation

Abstract

This work addresses category-level pose estimation. Current state-of-the-art methods struggle with symmetric objects and with generalizing to new environments when trained solely on synthetic data. We address both problems with a probabilistic model that uses diffusion to estimate dense canonical maps, which are crucial for recovering partial object shapes and for establishing the correspondences needed for pose estimation. We further introduce components that improve performance by combining the strengths of diffusion models with multi-modal input representations. We demonstrate the effectiveness of our method on a range of real datasets. Despite being trained solely on our generated synthetic data, our approach achieves state-of-the-art performance and unprecedented generalization, outperforming baselines, including those trained specifically on the target domain.

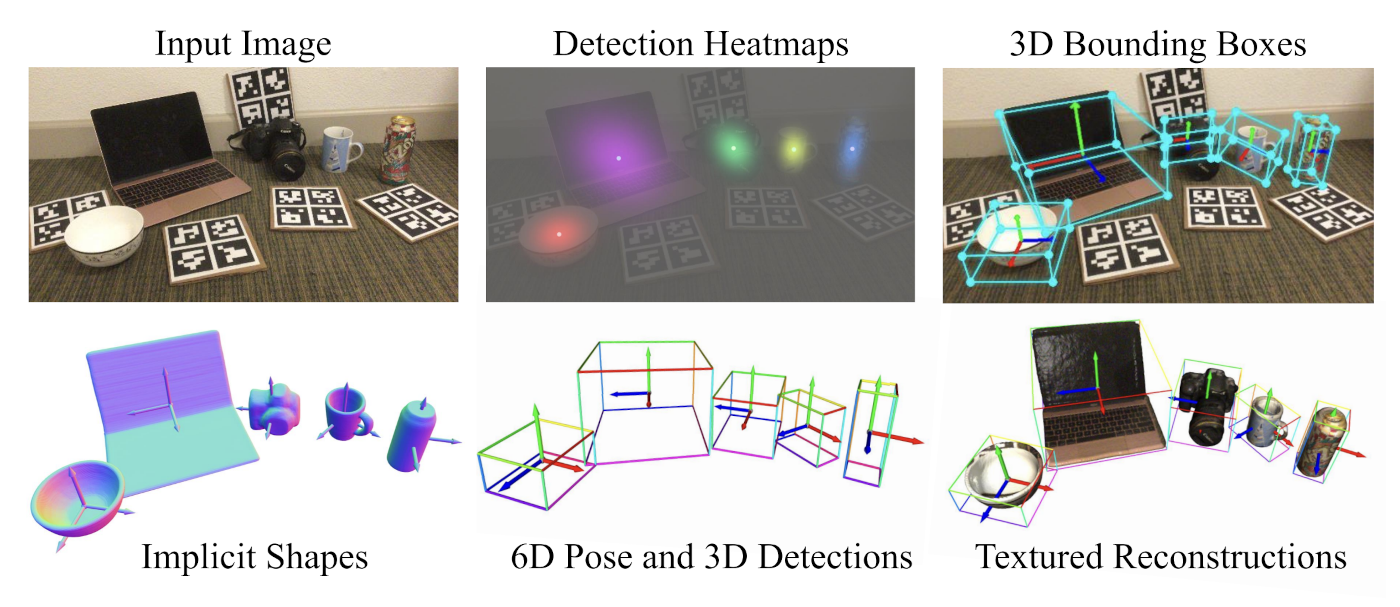

Method

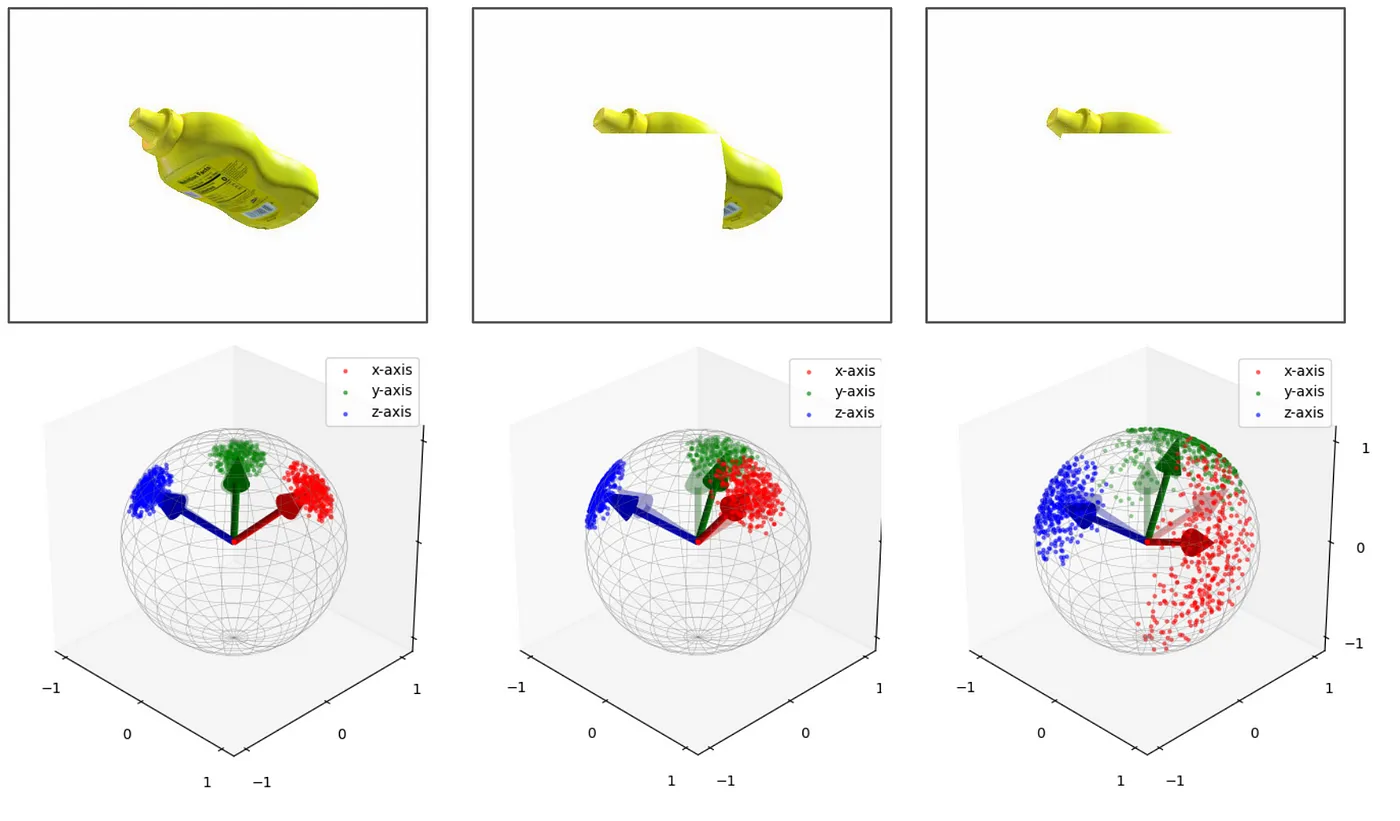
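
The abstract describes the predicted dense canonical maps as correspondences for pose estimation. As a rough illustration of how such correspondences can yield a pose, the sketch below fits a similarity transform (scale, rotation, translation) between canonical points and observed camera-frame points using the classic Umeyama least-squares alignment. This is an assumption for illustration only: this page does not state which solver DiffusionNOCS uses, and the function name `umeyama_similarity` and the synthetic data are hypothetical.

```python
import numpy as np

def umeyama_similarity(src, dst):
    """Least-squares similarity transform with dst ~= s * R @ src + t.

    src, dst: (N, 3) arrays of corresponding 3D points.
    Returns scale s (float), rotation R (3x3), translation t (3,).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = src.shape[0]

    # Center both point sets.
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst

    # Cross-covariance between target and source points.
    cov = dst_c.T @ src_c / n
    U, D, Vt = np.linalg.svd(cov)

    # Reflection guard: force det(R) = +1.
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0

    R = U @ S @ Vt
    var_src = (src_c ** 2).sum() / n
    s = np.trace(np.diag(D) @ S) / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t

# Synthetic check (hypothetical data): canonical points in a unit cube,
# mapped into the camera frame by a known similarity transform.
rng = np.random.default_rng(0)
canonical = rng.uniform(-0.5, 0.5, size=(100, 3))
angle = 0.3
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
s_true, t_true = 0.2, np.array([0.1, -0.05, 0.8])
observed = s_true * canonical @ R_true.T + t_true

s_est, R_est, t_est = umeyama_similarity(canonical, observed)
```

With noisy or partially wrong correspondences, a robust wrapper such as RANSAC around this fit is a common choice; that is likewise not specified here.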

Handling Symmetry

Thanks to its probabilistic nature, DiffusionNOCS handles symmetric objects without the special data annotations or heuristics that many SOTA methods rely on.

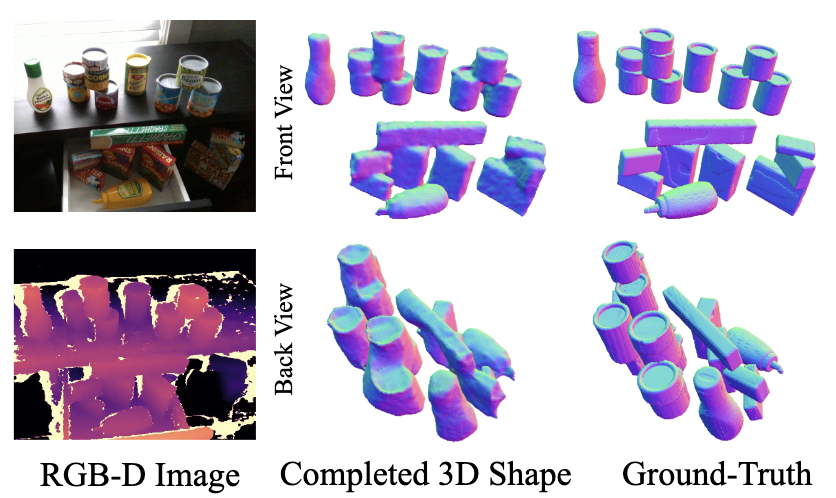
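
A toy example may help show why a probabilistic model suits symmetric objects. For an object with a 180° symmetry, one surface point has two equally valid canonical coordinates. A deterministic regressor trained with squared error tends toward their average, which matches neither, whereas a sampler returns one valid mode. The point, the symmetry axis, and all variable names below are hypothetical, and the sampler is a stand-in for a generative model, not the DiffusionNOCS network.

```python
import numpy as np

# Hypothetical canonical coordinate of one surface point.
p = np.array([0.4, 0.1, 0.0])

# 180-degree rotation about the y axis: an assumed object symmetry.
R_sym = np.diag([-1.0, 1.0, -1.0])

# Both coordinates are equally valid labels for the same observed pixel.
modes = np.stack([p, R_sym @ p])

def dist_to_nearest_mode(x):
    """Distance from a prediction to the closest valid canonical coordinate."""
    return np.linalg.norm(modes - x, axis=1).min()

# Squared-error regression over ambiguous labels converges to their mean.
mean_prediction = modes.mean(axis=0)

# A sampler (stand-in for a generative model) picks one valid mode.
rng = np.random.default_rng(0)
sampled_prediction = modes[rng.integers(len(modes))]

err_mean = dist_to_nearest_mode(mean_prediction)
err_sample = dist_to_nearest_mode(sampled_prediction)
```

Here the averaged prediction collapses onto the symmetry axis and lands 0.4 units from either valid coordinate, while the sampled prediction coincides with one of them.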

Selectable Inputs

Because our method supports selectable inputs, a single network can generate reconstructions from various inputs without re-training.
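
One plausible way to let a single network accept different input subsets is to keep the conditioning tensor at a fixed layout and zero-fill whichever modalities are not selected. The sketch below illustrates that idea only. It is an assumption, not the mechanism confirmed on this page, and the modality names (`rgb`, `normals`), channel counts, and the function `build_condition` are hypothetical.

```python
import numpy as np

def build_condition(inputs, selected, channels):
    """Concatenate modalities along the channel axis with a fixed layout.

    inputs:   dict name -> (H, W, C) array (hypothetical modalities)
    selected: set of modality names to use for this call
    channels: dict name -> channel count, defining the fixed layout
    Unselected or missing modalities are replaced by zeros, so the
    output shape never changes and the same network can consume it.
    """
    h, w = next(iter(inputs.values())).shape[:2]
    parts = []
    for name, c in channels.items():
        if name in selected and name in inputs:
            parts.append(inputs[name].astype(np.float32))
        else:
            parts.append(np.zeros((h, w, c), dtype=np.float32))
    return np.concatenate(parts, axis=-1)

# Hypothetical 4x5 crop with two modalities of three channels each.
channels = {"rgb": 3, "normals": 3}
inputs = {"rgb": np.ones((4, 5, 3)), "normals": np.ones((4, 5, 3))}

cond_all = build_condition(inputs, {"rgb", "normals"}, channels)
cond_rgb = build_condition(inputs, {"rgb"}, channels)
```

For zero-filled inputs to be meaningful at test time, a network would typically need to see randomly dropped modalities during training; whether DiffusionNOCS does this is not stated here.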

NOCS Real 275 Benchmark

Among SOTA baselines trained on synthetic data, DiffusionNOCS achieves the best results on NOCS Real 275 (Wang et al., 2019), the de facto standard benchmark for category-level pose estimation.

Generalization Benchmark

To demonstrate how existing state-of-the-art (SOTA) methods perform in various challenging real-world environments, we introduce a zero-shot Generalization Benchmark consisting of three datasets commonly used for instance-level pose estimation: YCB-V (Xiang et al., 2018), HOPE (Tyree et al., 2022), and TYO-L (Hodan et al., 2018). DiffusionNOCS achieves the best overall performance, even when compared to methods trained on real data.

Citation

@inproceedings{diffusionnocs,
  title={DiffusionNOCS: Managing Symmetry and Uncertainty in Sim2Real Multi-Modal Category-level Pose Estimation},
  author={Takuya Ikeda and Sergey Zakharov and Tianyi Ko and Muhammad Zubair Irshad and Robert Lee and Katherine Liu and Rares Ambrus and Koichi Nishiwaki},
  journal={arXiv},
  year={2024}
}